Considerations for the Use of AI in the Creation of Lay Summaries of Clinical Trial Results

By Multiple Authors|Jul 16, 2025

Abstract

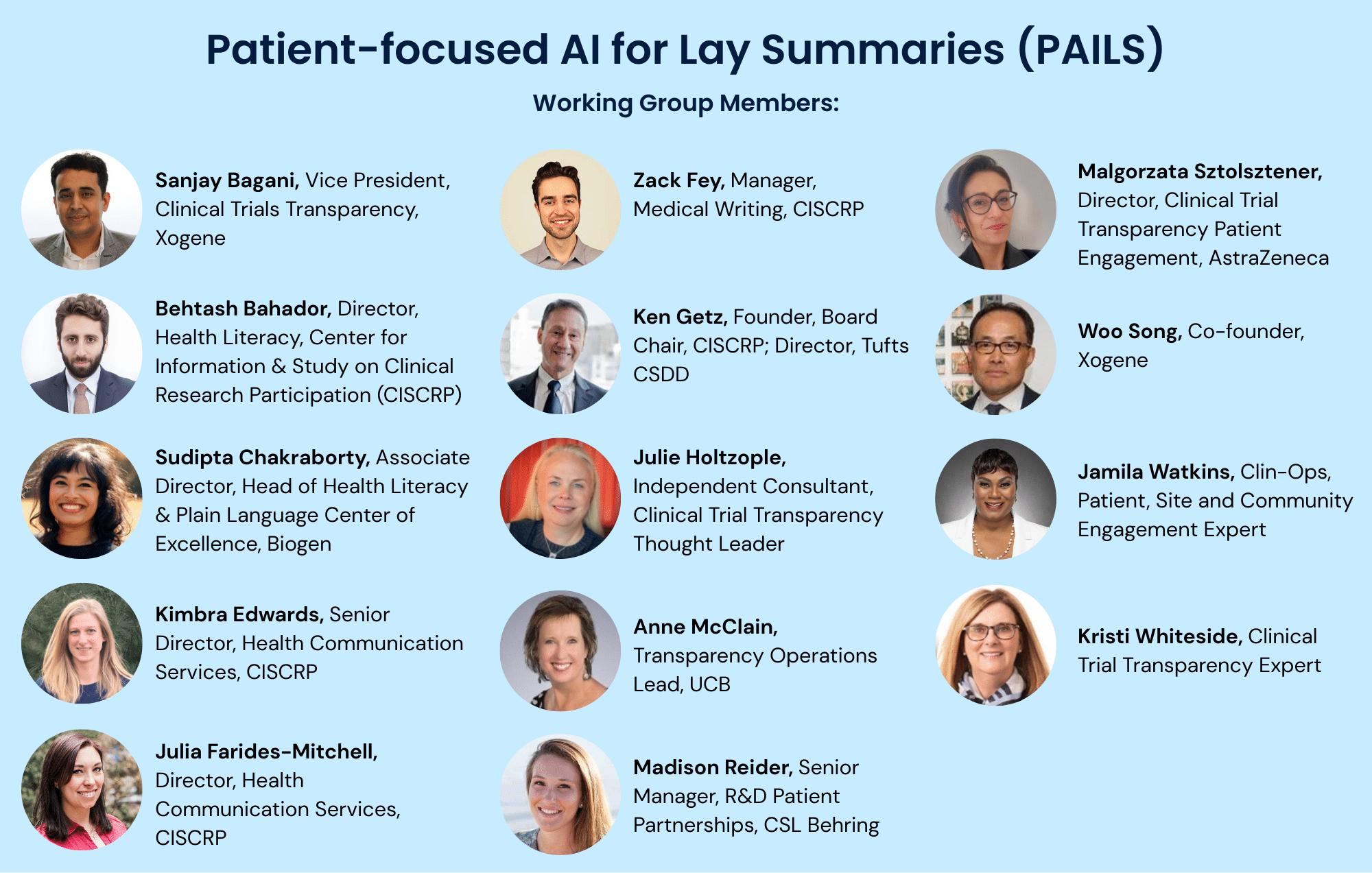

The clinical research landscape is constantly evolving, as new regulations and innovations come together to help accelerate scientific discoveries and medical advances. A prominent example of this is the rapidly emerging technology of artificial intelligence (AI). Using AI to develop lay summaries (LS) of clinical trial results can enhance transparency and accessibility, while maximising efficiencies and facilitating scalability. This document is a product of collaboration between experts from over 15 organisations in the US and the EU, including industry, academia, and a patient-focused nonprofit. It aims to explore how AI can be responsibly applied to LS development. While aligning with current industry standards, this document provides several recommendations for AI implementation that highlight the necessity of human oversight and expertise. This joint effort between human and machine can help LS achieve high standards in accuracy, transparency, and compliance, while building public trust and empowering patients to make informed healthcare decisions.

Medical Writing. 2025;34(2):74–81. https://doi.org/10.56012/vmag9372

Generative AI has the potential to streamline tasks, accelerate timelines, and expand access by reducing the resources needed to create a summary. However, AI alone cannot ensure accuracy, nuance, or cultural sensitivity. A hybrid approach, in which humans guide, review, and revise AI-generated drafts, is essential.

The output of generative AI is only as good as its inputs. Thoughtful, specific prompts that include instructions on tone, reading level, terminology, structure, and disclaimers can make the difference between a usable draft and an unusable one.
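
As an illustration of what a specific prompt might contain, the sketch below assembles one from the five elements named above. It is a minimal example under stated assumptions, not a recommended or validated template: the names `PromptSpec` and `build_prompt`, the default wording, the example reading level, and the sample headings and disclaimer are all hypothetical and would need to be adapted to an organisation's own standards.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the prompt elements named in the text."""
    tone: str = "neutral, respectful, non-promotional"
    # Illustrative default only; the target level is an assumption.
    reading_level: str = "roughly a 6th to 8th grade reading level"
    # Maps a technical term to the preferred plain-language wording.
    terminology: dict[str, str] = field(default_factory=dict)
    # Section headings the draft should follow, in order.
    sections: list[str] = field(default_factory=list)
    disclaimer: str = ""


def build_prompt(spec: PromptSpec, source_text: str) -> str:
    """Assemble one explicit prompt string from the spec and the source text."""
    lines = [
        "Write a lay summary of the clinical trial results provided below.",
        f"Tone: {spec.tone}.",
        f"Reading level: {spec.reading_level}.",
    ]
    if spec.terminology:
        lines.append("Terminology (use the plain-language wording):")
        lines += [
            f'- say "{plain}" instead of "{term}"'
            for term, plain in spec.terminology.items()
        ]
    if spec.sections:
        lines.append("Structure (use these headings, in this order):")
        lines += [f"{i}. {name}" for i, name in enumerate(spec.sections, start=1)]
    if spec.disclaimer:
        lines.append(f'End with this disclaimer, word for word: "{spec.disclaimer}"')
    lines.append("Use only information from the source text; do not add results or claims.")
    lines += ["", "SOURCE TEXT:", source_text]
    return "\n".join(lines)


if __name__ == "__main__":
    # Sample values are placeholders, not real study content.
    example = PromptSpec(
        terminology={"adverse event": "medical problem"},
        sections=["Why was the study done?", "What were the results?"],
        disclaimer="This summary was drafted with AI and reviewed by people.",
    )
    print(build_prompt(example, "<results text goes here>"))
```

Keeping the elements in a structured object rather than a free-text prompt makes it easier for a human reviewer to see, and later disclose, exactly which instructions the model was given.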

Transparent disclosure of how AI was used, and of where human oversight occurred, is vital. It builds trust with patients and aligns with global regulatory trends, including the EU AI Act and FDA guidance.